When Cloudflare accused AI search engine Perplexity of stealthily scraping websites and ignoring crawling restrictions this week, it seemed like a clear-cut case of AI misbehavior. Cloudflare, which provides security and performance services to millions of websites, has unique visibility into internet traffic patterns—giving the company unusual authority to call out bad actors. But the ensuing debate has revealed this isn’t a simple case of an internet watchdog catching a rogue AI crawler.

The controversy centers on a fundamental question that will only grow more pressing as AI agents flood the internet: should an AI agent that accesses a website on behalf of its user be treated like a bot, or like the human who would otherwise make the same request? Many people came to Perplexity’s defense, arguing that although accessing a site against its owner’s wishes is controversial, doing so on a user’s behalf is acceptable.

Cloudflare, known for its anti-bot and web security services, ran a test. It set up a new website on a domain that no bot had ever crawled, configured a robots.txt file to specifically block Perplexity’s known AI crawlers, and then asked Perplexity about the website’s content. Perplexity answered the question.

Cloudflare researchers found that when its declared web crawler was blocked, the AI search engine switched to a generic browser user agent designed to impersonate Google Chrome on macOS. Cloudflare CEO Matthew Prince shared the research on X, stating, “Some supposedly ‘reputable’ AI companies act more like North Korean hackers. Time to name, shame, and hard block them.”
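Why a changed user agent matters can be shown with Python’s standard-library robots.txt parser. The sketch below assumes a robots.txt that names the two user-agent tokens Perplexity publishes for its crawlers, `PerplexityBot` and `Perplexity-User`, and has no catch-all `User-agent: *` group; the file contents, the example URL, and the Chrome string are illustrative assumptions, not the exact setup or logs from Cloudflare’s test.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt modeled on the test described above: it blocks
# Perplexity's declared crawler tokens and has no catch-all "*" group.
ROBOTS_TXT = """\
User-agent: PerplexityBot
User-agent: Perplexity-User
Disallow: /
"""

# Illustrative desktop Chrome on macOS user-agent string (an assumption,
# not the exact string recorded in Cloudflare's logs).
CHROME_MAC_UA = (
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36"
)


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly instead of fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Return whether robots.txt permits this user agent to fetch the URL.

    The answer depends entirely on the name the client announces.
    """
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    parser = build_parser(ROBOTS_TXT)
    url = "https://example.com/secret-page"
    for agent in ("PerplexityBot", "Perplexity-User", CHROME_MAC_UA):
        print(f"{agent[:40]!r}: {allowed(parser, agent, url)}")
```

The two declared tokens are refused, while the Chrome string matches no group and, with no `*` fallback, is allowed. robots.txt is a voluntary convention: it only works when a client identifies itself honestly, which is why a crawler that presents itself as an ordinary browser slips past it.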

However, many disagreed with Prince’s assessment that this was bad behavior. Defenders of Perplexity on platforms like X and Hacker News pointed out that Cloudflare had documented the AI accessing a public website only when a user asked it to. One commenter wrote, “If I as a human request a website, then I should be shown the content. Why would the LLM accessing the website on my behalf be in a different legal category than my Firefox web browser?”

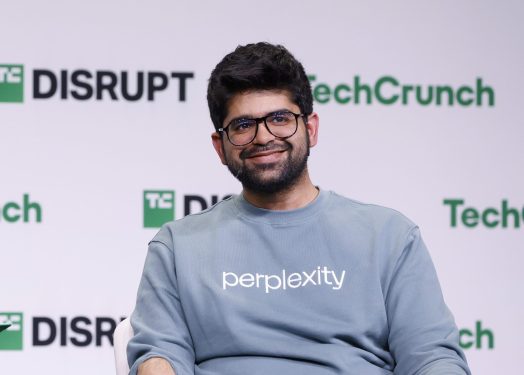

A Perplexity spokesperson denied that the bots belonged to the company and called Cloudflare’s blog post a sales pitch. Later, Perplexity published a blog post defending its actions, claiming the behavior came from a third-party service it occasionally uses. The post argued that Cloudflare’s systems fail to distinguish between legitimate AI assistants and actual threats.

Cloudflare countered by pointing out that OpenAI follows best practices, respecting robots.txt and not evading network-level blocks. OpenAI also signs HTTP requests using the proposed Web Bot Auth standard, a Cloudflare-supported initiative to cryptographically identify AI agent requests.
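Web Bot Auth builds on HTTP Message Signatures (RFC 9421): the agent signs selected parts of each request and attaches the result in `Signature-Input` and `Signature` headers, so a site can check who sent a request regardless of its user-agent string. The sketch below shows that sign-and-verify round trip under loudly stated simplifications. It covers only the `@authority` component, uses a hypothetical key and key ID, models the `tag` value on the draft as an assumption, omits the draft’s `Signature-Agent` header, and substitutes stdlib HMAC-SHA256 (an algorithm RFC 9421 also defines) for the Ed25519 public-key signatures Web Bot Auth uses. It is not a compliant implementation.

```python
import base64
import hashlib
import hmac
import time

# Hypothetical shared secret and key ID. Real Web Bot Auth uses an Ed25519
# key pair whose public half the agent publishes for sites to fetch.
DEMO_KEY = b"demo-secret-key"
DEMO_KEY_ID = "demo-key-1"


def signature_params(components, created, keyid, tag):
    """Serialize covered components and parameters as an RFC 9421 inner list."""
    covered = " ".join(f'"{c}"' for c in components)
    return f'({covered});created={created};keyid="{keyid}";tag="{tag}"'


def signature_base(authority, params):
    """Build the RFC 9421 signature base covering only @authority."""
    return f'"@authority": {authority}\n"@signature-params": {params}'


def sign_request(authority, created=None):
    """Agent side: return the Signature-Input and Signature headers."""
    created = int(time.time()) if created is None else created
    params = signature_params(["@authority"], created, DEMO_KEY_ID, "web-bot-auth")
    base = signature_base(authority, params)
    mac = hmac.new(DEMO_KEY, base.encode(), hashlib.sha256).digest()
    return {
        "Signature-Input": f"sig1={params}",
        "Signature": f"sig1=:{base64.b64encode(mac).decode()}:",
    }


def verify_request(authority, headers):
    """Site side: rebuild the base and compare MACs in constant time.

    Simplified: a real verifier would also check the covered components,
    the tag, key lookup, and the created/expires timestamps.
    """
    params = headers["Signature-Input"].removeprefix("sig1=")
    base = signature_base(authority, params)
    expected = hmac.new(DEMO_KEY, base.encode(), hashlib.sha256).digest()
    encoded = headers["Signature"].removeprefix("sig1=:").removesuffix(":")
    return hmac.compare_digest(expected, base64.b64decode(encoded))


if __name__ == "__main__":
    headers = sign_request("example.com", created=1735689600)
    print(headers["Signature-Input"])
    print(verify_request("example.com", headers))
    print(verify_request("other.example", headers))
```

The substitution changes the trust model: with HMAC, every site would need the agent’s secret, whereas public-key signatures let any site verify a request against the agent’s published key without being able to forge one.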

This debate unfolds as bot activity reshapes the internet. Bots scraping content for AI training have become a significant problem, especially for smaller sites. For the first time, bot traffic exceeds human traffic online, accounting for over 50% of the total, a shift driven in part by AI. Malicious bots, which carry out activities such as scraping and unauthorized login attempts, now make up 37% of all internet traffic.

Historically, websites have blocked most bot traffic as malicious, using CAPTCHAs and services like Cloudflare, while cooperating with well-behaved crawlers like Googlebot and guiding them via robots.txt. Now LLMs account for a growing share of internet traffic, and Gartner predicts that search engine volume will drop by 25% by 2026. For now, users tend to click through from an LLM’s answer to a website when they are ready to transact, which benefits the site. But if AI agents take over tasks like booking travel or shopping, websites that block them could end up hurting their own business interests.

The debate on X highlights the dilemma. One user wrote, “I WANT Perplexity to visit any public content on my behalf when I give it a request!” Another countered, “What if the site owners don’t want it? They just want you to directly visit the site, see their stuff, and generate ad revenue.” A third predicted, “This is why I can’t see ‘agentic browsing’ really working—most website owners will just block it.”

The issue remains unresolved, reflecting broader tensions as AI transforms how we interact with the web.