The race to release world models is accelerating. AI image and video generation company Runway has joined a growing number of startups and major tech companies by launching its first world model, called GWM-1. The model operates through frame-by-frame prediction, creating a simulation with an understanding of physics and how the world behaves over time.

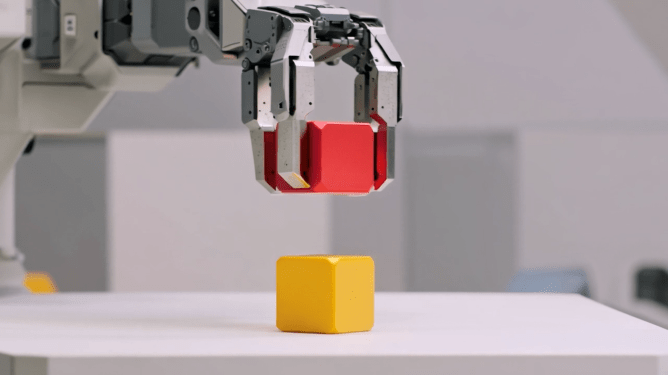

A world model is an AI system that learns an internal simulation of how the world works. This allows it to reason, plan, and act without needing to be trained on every possible real-life scenario. Runway, which recently launched its Gen 4.5 video model that surpassed models from both Google and OpenAI on a key leaderboard, says GWM-1 is more general than competitors like Google’s Genie 3. The company pitches it as a tool for creating simulations to train agents in domains such as robotics and life sciences.
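To make the idea of planning inside an internal simulation concrete, here is a minimal sketch in Python. It is a toy, not a description of how GWM-1 works: the `world_model` function is a hypothetical stand-in for learned dynamics (a position on a line that shifts by the chosen action), and `plan` is an illustrative brute-force search over imagined futures.

```python
from itertools import product
from typing import Optional, Sequence, Tuple


def world_model(state: int, action: int) -> int:
    """Hypothetical stand-in for learned dynamics: position shifts by the action."""
    return state + action


def plan(start: int, goal: int, horizon: int = 3,
         actions: Sequence[int] = (-1, 0, 1)) -> Optional[Tuple[int, ...]]:
    """Try every action sequence inside the model and keep the one ending nearest the goal."""
    best_seq, best_cost = None, float("inf")
    for seq in product(actions, repeat=horizon):
        state = start
        for action in seq:
            # Imagine the outcome with the model instead of acting in the real world.
            state = world_model(state, action)
        cost = abs(goal - state)
        if cost < best_cost:
            best_seq, best_cost = seq, cost
    return best_seq


if __name__ == "__main__":
    print(plan(start=0, goal=3))
```

The agent never acts in the real environment while it searches. It only queries the model, which is the property that makes world models attractive for training agents.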

According to the company’s CTO Anastasis Germanidis, building a world model first required building a great video model. The company believes teaching models to predict pixels directly is the best path to achieving general-purpose simulation. Given enough scale and the right data, it argues, a model can develop a sufficient understanding of how the world works.

Runway released three specific versions of the new world model: GWM-Worlds, GWM-Robotics, and GWM-Avatars. GWM-Worlds is an application that lets users create an interactive project. Users set a scene through a prompt or image, and as they explore, the model generates the world with an understanding of geometry, physics, and lighting. The simulation runs at 24 frames per second and 720p resolution. While Worlds is useful for gaming, Runway says it is also positioned to teach agents how to navigate the physical world.
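The interactive loop described above, in which each new frame depends on what came before and on how the user moves, can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than Runway's implementation: `predict_next_frame` is a hypothetical placeholder for the learned model, a "frame" is reduced to a 2D camera position, and 720p is assumed to mean the conventional 1280×720 pixels.

```python
from typing import List, Tuple

Frame = Tuple[float, float]   # toy "frame": a camera position standing in for pixels
Action = Tuple[float, float]  # user input: how far to move along x and y

FPS = 24                      # frame rate quoted in the article
WIDTH, HEIGHT = 1280, 720     # assumed pixel dimensions for 720p

FRAME_BUDGET_MS = 1000 / FPS                # time available to produce each frame
PIXELS_PER_SECOND = WIDTH * HEIGHT * FPS    # raw pixels generated per second


def predict_next_frame(history: List[Frame], action: Action) -> Frame:
    """Hypothetical placeholder for the model: move the camera by the action."""
    x, y = history[-1]
    dx, dy = action
    return (x + dx, y + dy)


def rollout(first_frame: Frame, actions: List[Action]) -> List[Frame]:
    """Generate frames one at a time, feeding each prediction back in as context."""
    history = [first_frame]
    for action in actions:
        history.append(predict_next_frame(history, action))
    return history


if __name__ == "__main__":
    print(rollout((0.0, 0.0), [(1.0, 0.0), (0.0, 1.0)]))
    print(f"{FRAME_BUDGET_MS:.2f} ms per frame, {PIXELS_PER_SECOND:,} pixels per second")
```

Under these assumptions, the quoted figures leave roughly 41.67 milliseconds to produce each frame and amount to 22,118,400 pixels per second, which gives a sense of why real-time generation is demanding.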

With GWM-Robotics, the company aims to train robots on synthetic data enriched with new parameters like changing weather conditions or obstacles. Runway says this method could also reveal when and how robots might violate policies in different scenarios. Runway is also building realistic avatars under GWM-Avatars to simulate human behavior, entering a space where other companies have worked on human avatars for communication and training. The company notes that Worlds, Robotics, and Avatars are technically separate models for now, but it plans to eventually merge them into one.
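The idea of varying conditions to find where a robot breaks its rules can be illustrated with a small Python sketch. Everything here is hypothetical and chosen for illustration: the `Scenario` fields, the toy braking formula, and the naive policy are assumptions, not part of GWM-Robotics or its SDK.

```python
import random
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Scenario:
    weather: str                 # "clear", "rain", or "fog"
    obstacle_distance_m: float   # distance to the nearest obstacle


def sample_scenarios(n: int, seed: int = 0) -> List[Scenario]:
    """Draw randomized synthetic scenarios; seeded so runs are repeatable."""
    rng = random.Random(seed)
    return [
        Scenario(rng.choice(["clear", "rain", "fog"]), rng.uniform(0.5, 5.0))
        for _ in range(n)
    ]


def stopping_distance_m(speed_mps: float, weather: str) -> float:
    """Toy braking model (an assumption): worse weather lengthens the stop."""
    factor = {"clear": 1.0, "rain": 1.5, "fog": 1.2}[weather]
    return factor * speed_mps ** 2 / 4.0


def naive_policy(scenario: Scenario) -> float:
    """Hypothetical robot policy that ignores conditions and always drives at 2 m/s."""
    return 2.0


def find_violations(scenarios: List[Scenario],
                    policy: Callable[[Scenario], float]) -> List[Scenario]:
    """Return scenarios where the robot could not stop before the obstacle."""
    return [
        s for s in scenarios
        if stopping_distance_m(policy(s), s.weather) > s.obstacle_distance_m
    ]


if __name__ == "__main__":
    for scenario in find_violations(sample_scenarios(20), naive_policy):
        print(scenario)
```

In this toy setup, the same policy that is safe in clear weather fails in rain at the same obstacle distance. That is the kind of condition-dependent failure a simulator can surface before a robot is deployed.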

Alongside the world model, Runway is updating its foundational Gen 4.5 model. The update brings native audio and long-form, multi-shot generation capabilities. Users can generate one-minute videos with character consistency, native dialogue, background audio, and complex shots from various angles. The model also lets users edit existing audio, add dialogue, and edit multi-shot videos of any length.

This Gen 4.5 update brings Runway closer to competitor Kling’s all-in-one video suite, particularly regarding native audio and multi-shot storytelling. It signals that video generation models are moving from prototype to production-ready tools. The updated Gen 4.5 model is available to all paid plan users.

Runway said it will make GWM-Robotics available through a software development kit. The company is in active conversations with several robotics firms and enterprises about using both GWM-Robotics and GWM-Avatars.