Nvidia unveiled a new suite of robot foundation models, simulation tools, and edge hardware at CES. The move signals the company’s ambition to become the default platform for generalist robotics, much as Android became the default operating system for smartphones. Nvidia’s push into robotics reflects a broader industry shift as AI moves off the cloud and into physical machines, a transition enabled by cheaper sensors, advanced simulation, and AI models that increasingly generalize across tasks.

The company detailed its full-stack ecosystem for physical AI. This includes new open foundation models that allow robots to reason, plan, and adapt across many tasks and diverse environments, moving beyond narrow, task-specific bots. All of these models are available on Hugging Face.

The released models include Cosmos Transfer 2.5 and Cosmos Predict 2.5, two world models for synthetic data generation and for evaluating robot policies in simulation. Also included is Cosmos Reason 2, a reasoning vision-language model that allows AI systems to see, understand, and act in the physical world. Finally, Isaac GR00T N1.6 is a next-generation vision-language-action model purpose-built for humanoid robots. GR00T relies on Cosmos Reason as its brain and unlocks whole-body control for humanoids so they can move and handle objects simultaneously.

Nvidia also introduced Isaac Lab-Arena at CES, an open source simulation framework hosted on GitHub that forms another component of the company’s physical AI platform and enables safe virtual testing of robotic capabilities. The platform addresses a critical industry challenge: as robots learn increasingly complex tasks, validating those abilities in physical environments can be costly, slow, and risky. Isaac Lab-Arena tackles this by consolidating resources, task scenarios, training tools, and established benchmarks like LIBERO, RoboCasa, and RoboTwin, creating a unified standard where the industry previously lacked one.

Supporting this ecosystem is Nvidia OSMO, an open source command center that serves as connective infrastructure. It integrates the entire workflow from data generation through training across both desktop and cloud environments.

To help power it all, Nvidia announced the new Blackwell-powered Jetson T4000 module, the newest member of the Thor family. Nvidia pitches it as a cost-effective on-device compute upgrade that delivers 1,200 teraflops of AI compute and 64 gigabytes of memory while drawing 40 to 70 watts.

Nvidia is also deepening its partnership with Hugging Face to let more people experiment with robot training without needing expensive hardware or specialized knowledge. The collaboration integrates Nvidia’s Isaac and GR00T technologies into Hugging Face’s LeRobot framework, connecting Nvidia’s robotics developers with Hugging Face’s AI builders. Hugging Face’s open source Reachy 2 humanoid now works directly with Nvidia’s Jetson Thor chip, letting developers experiment with different AI models without being locked into proprietary systems.

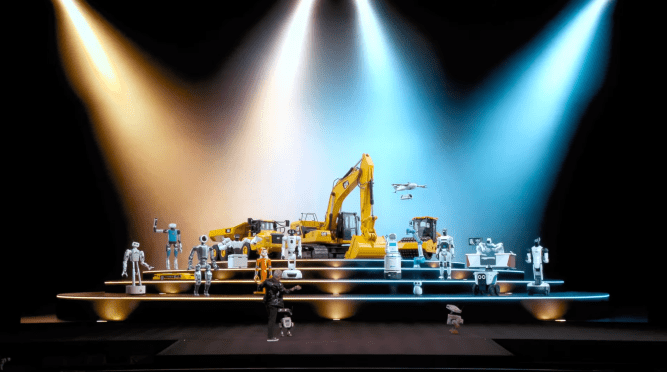

The bigger picture is that Nvidia is trying to make robotics development more accessible, and it wants to be the underlying hardware and software vendor powering it, much as Android is the default for smartphone makers. There are early signs that the strategy is working. Robotics is the fastest-growing category on Hugging Face, with Nvidia’s models leading downloads. Meanwhile, robotics companies from Boston Dynamics and Caterpillar to Franka Robotics and NEURA Robotics are already using Nvidia’s technology.