Luma, the a16z-backed AI video and 3D model company, has released a new model called Ray3 Modify. This model allows users to modify existing footage by providing character reference images. A key feature is that it preserves the performance of the original footage. Users can also provide a start and an end frame to guide the model in generating transitional footage.

The company said on Thursday that Ray3 Modify addresses the problem of preserving human performance when creative studios edit footage or generate effects with AI. The startup states the model follows the input footage more accurately, enabling studios to use human actors for creative or brand projects. Luma emphasized that the new model retains the actor’s original motion, timing, eye line, and emotional delivery even while transforming the scene.

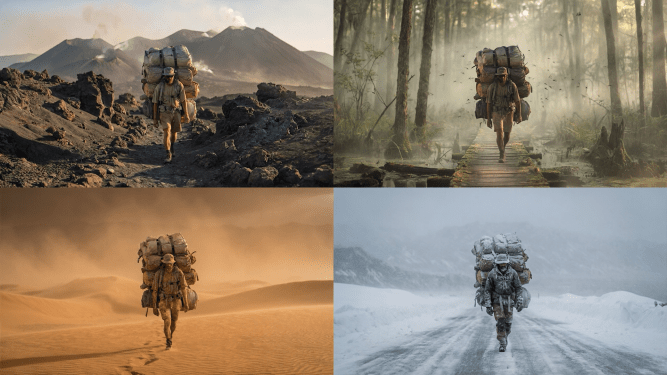

With Ray3 Modify, users provide a character reference to transform the original footage, converting the human actor’s appearance into that character. This reference also allows creators to retain information like costumes, likeness, and identity throughout the shoot.

Additionally, users can provide start and end reference frames to create a video using the new model. This is helpful for creators who need to direct transitions or control character movements and behavior while maintaining continuity between scenes.

Amit Jain, co-founder and CEO of Luma AI, said in a statement that generative video models are incredibly expressive but hard to control. He explained that Ray3 Modify blends the real world with the expressivity of AI while giving full control to creatives. This means creative teams can capture performances with a camera and then use AI to move them to any location, change costumes, or even reshoot the scene without recreating the physical shoot.

Luma said the new model is available to users through the company’s Dream Machine platform. The company, which competes with firms like Runway and Kling, first released video modification capabilities in June 2025.

This model release follows a fresh $900 million funding round for the startup, announced in November. The round was led by Humain, an AI company owned by Saudi Arabia’s Public Investment Fund. Existing investors including a16z, Amplify Partners, and Matrix Partners also participated. The startup also plans to build a 2 GW AI cluster in Saudi Arabia with Humain.