Teenagers are navigating a world that is changing faster than any previous generation has experienced. They are full of complex emotions, constantly stimulated, and deeply immersed in the digital realm. Now, AI companies have introduced chatbots designed for endless conversation, and the consequences have been serious. The company Character.AI is facing lawsuits and public criticism following the suicides of at least two teenagers who had prolonged conversations with AI chatbots on its platform.

In response, Character.AI is implementing changes to protect teenagers and children, even though these changes may hurt the company’s financial performance. The CEO, Karandeep Anand, stated that the company will remove the ability for users under eighteen to engage in any open-ended chats with AI on the platform. Open-ended conversation refers to the unconstrained back-and-forth in which a chatbot responds with follow-up questions, a pattern experts say is designed to maximize user engagement. Anand argues that this type of interaction, where the AI acts as a conversational partner instead of a creative tool, is not only risky for young people but also at odds with the company’s vision.

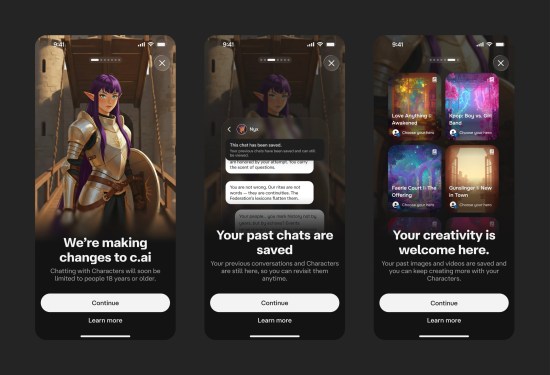

The startup is now attempting to shift from being an AI companion to a role-playing platform. The goal is to move teen engagement from conversation to creation. Instead of chatting with an AI friend, teens will use prompts to collaboratively build stories or generate visuals. Character.AI will phase out teen access to chatbots by November twenty-fifth, starting with a two-hour daily limit that will progressively decrease to zero. To enforce this ban, the platform will use an in-house age verification tool that analyzes user behavior, along with third-party tools. If those methods are unsuccessful, the company will use facial recognition and ID checks to verify ages.

This move follows other safety measures Character.AI has implemented for teenagers, including a parental insights tool, filtered characters, limited romantic conversations, and time-spent notifications. Anand acknowledged that previous changes caused the company to lose a significant portion of its under-eighteen user base, and he expects these new changes to be equally unpopular. He said it is safe to assume many teen users will be disappointed, and the company expects further user churn.

As part of a broader push to transform into a content-driven social platform, Character.AI has recently launched several new entertainment features. These include AvatarFX, a video generation model; Scenes, which are interactive storylines; and Streams, a feature for dynamic character interactions. The company also launched a Community Feed where users can share their creations. In a statement to users under eighteen, the company apologized for the changes but stated that removing open-ended chat is the right thing to do given the questions about how teens should interact with this technology.

Anand clarified that the app is not being shut down for young users, only the open-ended chats. The company hopes that under-eighteen users will migrate to these other experiences, such as AI gaming, short videos, and storytelling. He acknowledged that some teens might move to other providers such as OpenAI, which has also faced scrutiny after a teenager died by suicide following long conversations with its ChatGPT model. Anand expressed hope that Character.AI’s decision sets an industry standard that open-ended chats are not an appropriate product to offer to minors.

Character.AI is making these decisions ahead of potential government regulation. Two US senators recently announced they would introduce legislation to ban AI chatbot companions for minors, following complaints from parents. Earlier this month, California became the first state to regulate AI companion chatbots, holding companies accountable if their products fail to meet safety standards. In addition to the platform changes, Character.AI said it will establish and fund the AI Safety Lab, an independent non-profit dedicated to safety innovation for future AI entertainment features. The CEO noted that while much industry work focuses on coding and development, more safety innovation is needed for the agentic AI that powers entertainment.