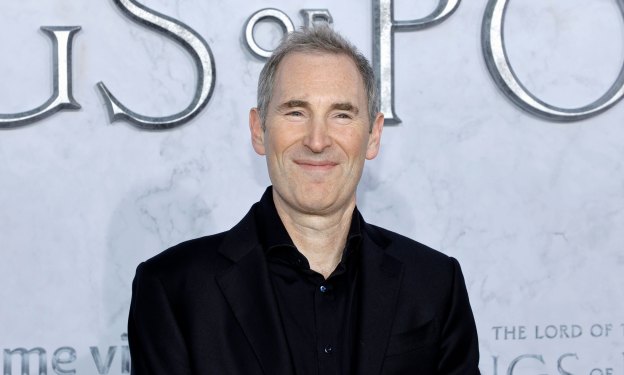

Can any company, big or small, really topple Nvidia’s AI chip dominance? Maybe not. But there are hundreds of billions of dollars of revenue for those who can even peel off a chunk of it for themselves, Amazon CEO Andy Jassy said this week.

As expected, the company used its AWS re:Invent conference to announce the next generation of its Nvidia-competitor AI chip, Trainium3, which is four times faster yet uses less power than the current Trainium2. Jassy also revealed details about Trainium2 that show why the company is so bullish on the chip.

He said Trainium2 has substantial traction: it is a multibillion-dollar revenue run-rate business, has more than one million chips in production, and is used by more than 100,000 companies. It also accounts for the majority of usage on Bedrock today. Bedrock is Amazon's AI app development tool that lets companies pick and choose among many AI models.

Jassy said Amazon's AI chip is winning over the company's enormous roster of cloud customers because it offers compelling price-performance advantages over other GPU options. In other words, he believes it delivers more performance per dollar than other GPUs on the market. That is, of course, Amazon's classic approach: offering its own homegrown tech at lower prices.

Additionally, AWS CEO Matt Garman offered more insight into one customer responsible for a big chunk of those billions in revenue. No shock here: it's Anthropic. Garman said, "We've seen some enormous traction from Trainium2, particularly from our partners at Anthropic who we've announced Project Rainier, where there's over 500,000 Trainium2 chips helping them build the next generations of models for Claude."

Project Rainier is Amazon's most ambitious AI server cluster, spread across multiple data centers in the U.S. and built to serve Anthropic's skyrocketing needs. It came online in October. Amazon is, of course, a major investor in Anthropic. In exchange, Anthropic made AWS its primary model training partner, even though Anthropic's models are now also offered on Microsoft's cloud, running on Nvidia's chips.

OpenAI is now also using AWS in addition to Microsoft's cloud. But the OpenAI partnership couldn't have contributed much to Trainium's revenue, because AWS is running OpenAI's workloads on Nvidia chips and systems, according to the cloud giant.

Only a handful of U.S. companies, such as Google, Microsoft, Amazon, and Meta, have all the engineering pieces needed to even attempt true competition with Nvidia. These include silicon chip design expertise, homegrown high-speed interconnects, and networking technology. Remember, Nvidia cornered the market on one major high-performance networking technology in 2019, when CEO Jensen Huang outbid Intel and Microsoft to buy InfiniBand hardware maker Mellanox.

On top of that, AI models and software built to run on Nvidia's chips also rely on Nvidia's proprietary Compute Unified Device Architecture (CUDA) software. CUDA lets applications use GPUs for general-purpose parallel computing, among other tasks. Just as in the chip wars of years past, rewriting an AI app for a non-CUDA chip is no small task.

Still, Amazon may have a plan for that. As previously reported, the generation after Trainium3, Trainium4, will be built to interoperate with Nvidia's GPUs in the same system. Whether that helps peel more business away from Nvidia or simply reinforces Nvidia's dominance, albeit on AWS's cloud, remains to be seen.

It may not matter to Amazon. If it is already on track to make multiple billions of dollars from Trainium2, and the next generation is that much better, it may be winner enough.